Boomi powers the future of business with intelligent integration and automation. As a category-leading, global software as a service (SaaS) company, Boomi celebrates more than 20,000 global customers and a worldwide network of 800 partners. Organizations turn to Boomi’s award-winning platform to connect their applications, data, and people to accelerate digital transformation.

Michael Bachman

Michael Bachman, with over two decades in IT, currently serves as the Head of Architecture and AI Strategy at the Boomi Innovation Group. His career includes significant roles at DHL, MTI Technology, Quantum, H.A. Storage, Dell | Compellent, Code 42, and Dell, where he focused on Dell storage architectures and the development of the Live Optics platform. Michael's expertise in architecting secure backup and sync environments and his leadership in AI strategy at Boomi highlight his profound impact on enterprise technology and innovative system integration.

Chris Cappetta

Chris Cappetta has a diverse work experience. Chris started their career as a Mountaineering Trip Leader at Camp Mondamin in 2013 and worked there until 2014. In 2010, they became a Men's Rugby Coach at Western State Colorado University and held the position until 2014. Chris then joined Corvisa, a ShoreTel company, as a Solutions Engineer in 2015 and worked there until 2016. Following that, they worked at ShoreTel as a Contact Center Solutions Architect from 2016 to 2016. In 2016, they also worked as an Innovation Sherpa at Green Glass Door. Currently, they are employed at Boomi, where they started as a Sales Engineer in 2017 and has since been promoted to Principal Solutions Architect in 2019.

Chris Cappetta has a Bachelor's degree in Politics and Government from Western Colorado University, which was obtained from 2010 to 2011. Prior to that, they studied Political Science and Government at the University of Pittsburgh from 2005 to 2007. Chris also holds a certification in Wilderness First Responder from the NOLS Wilderness Medicine Institute (WMI).

Episode Transcript

M.B: There is a lot of hype. You were talking about that before. What we want to do is turn the hype into reality. We have the pleasure of doing that daily, especially you. He calls himself the head button pusher because he's constantly on keyboards, trying to make sure that stuff works or tries to break it.

Making AI practical means understanding what goals are and orchestrating how we're going to make LLMs work for customers in various ways, such as RAG, fine-tuning, or agent building. That's what we do day to day.

C.C: Yeah, I'd add that the models are impressive as they are, but they can be made more impressive with the right logic, sequencing, steps, and extra data. Boomi has found itself in a phenomenal position with AI functionality in the platform. We do a lot of AI designing using the platform, which already handles data connectivity, orchestration, logic, and data transformation.

The AI models are just another point in a potentially 50-step process for us. This allows us to build out creative designs quickly, including AI, connectivity, mapping, and other elements. It's been a really enjoyable thing to explore.

Retrieval augmented generation

C.C: So RAG is retrieval augmented generation. It's a particular use case. If you ask Chat GPT to summarize something in your Salesforce org, it'll say, "I have no access to your Salesforce org," but you can take that extra step and retrieve the right or relevant information. Even if it's a big, ugly 30-page string of notes, you can feed that to one of these models and then ask your question, and it'll respond better.

All of that can happen programmatically. That's the concept of retrieval augmented generation. You're helping the models respond better by getting information they don't have access to or that's outside their training window.

By getting the right information, even if it's big and ugly, and including it in the prompt, the LLM can understand the user's question, parse through a massive amount of information, and use that to respond better.

You're letting the large language model understand what the user wants and giving it enough info to respond better than it could by itself.

When it comes to the context window, the amount of words you can feed into an LLM, is growing super fast. It used to be, even 10 months ago, if I were talking to the earlier versions of GPT-4, I would probably ask it 10 or so questions, and then it would kind of lose the plot. I would start a new conversation and pick up where we left off.

But now, you can have many hour-long technical conversations with it, and it'll track, maybe get a bit slower, but it'll still follow and won't go off the rails. It's really handling a lot more context well, but that's on the scale of a few-hour conversation. If you're looking at a whole documentation set or all of Wikipedia, it's still way outside what it can do.

Even top models that can handle massive amounts of context can handle them technically but not well. For example, I've seen tests where an Easter egg is hidden in a massive amount of context, and when asked, the model says it's not there. After a few tries, it finds it. You can feed it all this context, but it might not pay attention well to all of it.

That's the limitation of the context windows. I think those will get bigger and more reliable over time, but for now, it's better to give a smaller amount of accurate context. I'd rather give it three pages of really good context versus a hundred pages where only a couple of paragraphs are useful.

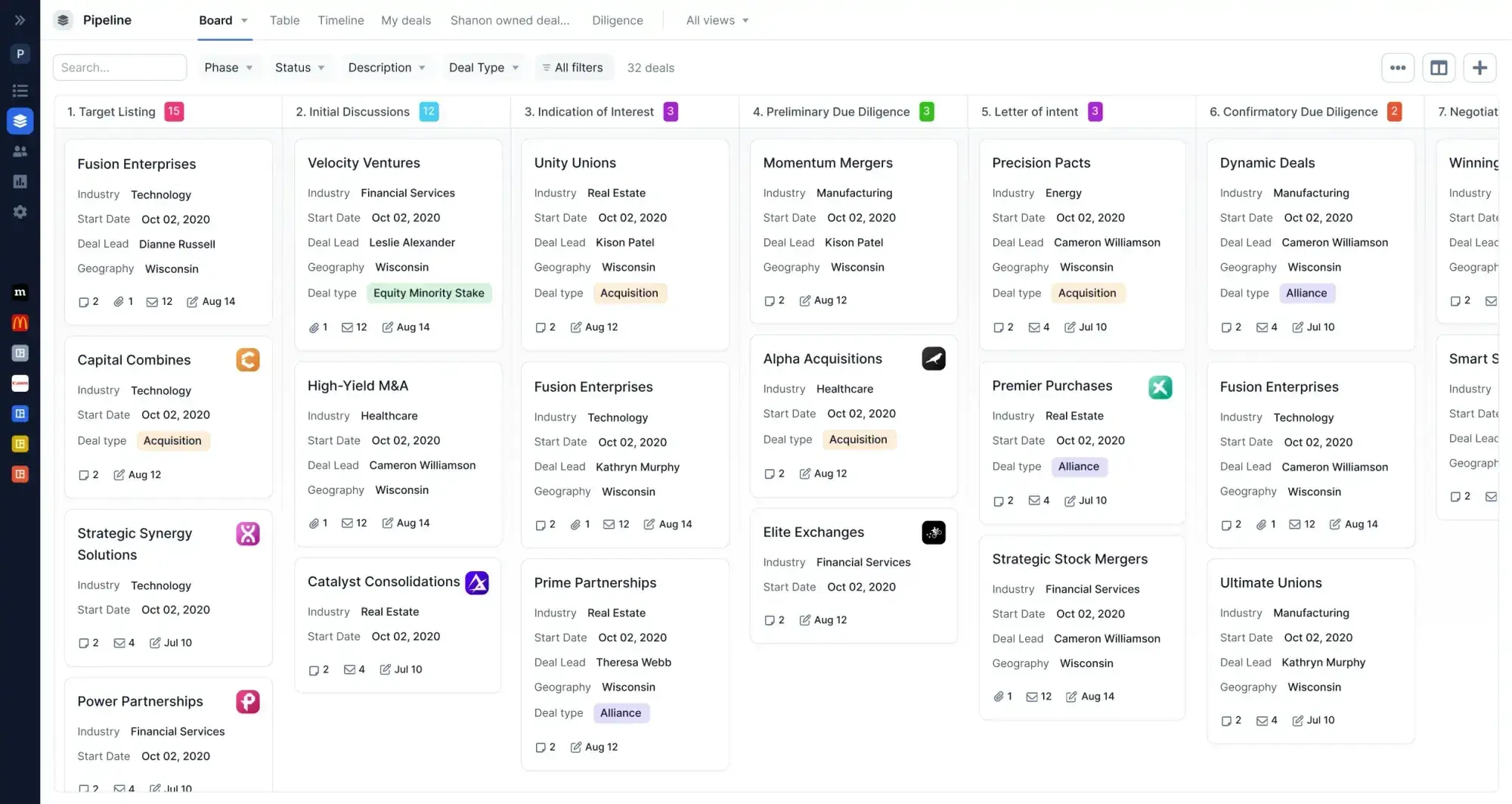

M.B: Yeah, when we're talking about a deal room for M&A, you've got multiple parties in there. You've got the lawyers, the printer, and the parties that are buying or selling. They're all going to be looking at contracts. One way that RAG could be super useful is summarizing what's in some of those contracts, especially if they're lengthy, which in M&A, they absolutely can be.

If you have a small context window, that's not going to help. If you don't have the details in these contracts, you need to augment the linguistic model. The idea with the deal room is to make it more efficient. Having RAG in conjunction with an LLM is critical.

Now, you can see what's relevant in those documents and how they will be validated along the way. This information is isolated to the deal room itself. If you let the language model operate in the deal room, you may not get the outcome you want because those models have only been trained on public data and not on the specifics of your deal room.

They will give an answer that sounds good, but it will be factually inaccurate to a greater degree for what a deal room would require.

Accuracy is always a concern. One way I'm thinking about it is summarization, which will help all parties understand what's in the documents. Another is mapping, understanding how one document or process could map to another, even in a deal room. That could be critical.

I'm no expert on deal rooms, but I have dealt with some of that in the past. Language models are really good at reading text and responding to it. RAGs just make that whole process a lot more efficient.

Large language models

M.B: They're useful in a lot of ways. The nice thing about LLMs is they deal with unstructured data, which represents 80 to 90 percent of all data created. This data also represents a problem that's been largely unsolved until now. If I can rely on an LLM to provide structured feedback based on unstructured data, that's really cool.

Before LLMs, with structured data, traditional machine learning required humans to think like a logical computation system. For instance, a SQL statement is not a natural language thing that we would do in communication. Instead, I want to ask in plain language for the LLM to provide that retrieval for me.

The LLM offloads the thinking to the model itself. Now, as the human, I don't need to think like a computer. The computation system emulates human-like thinking.

C.C: For me, LLMs excel at handling the grunt work. You still generally want a human in the loop, but if an LLM can make a human's process 80 percent faster and better, that's a huge win. LLMs can handle grunt work from the perspective of summarization and aggregation.

They can also serve as a companion to help you work through an idea. If I want to learn something, I no longer go to Google. I start a conversation with an LLM, get some info, and then have a back-and-forth dialogue to reach the idea or end result more effectively. This is a different way to engage with information, allowing for productive dialogues to work through ideas, concepts, and designs.

Discriminative vs Generative AI

M.B: Discriminative is probably the better way to describe classical or traditional machine learning. In the incipient phase of generative AI being launched wholesale through ChatGPT, we referred to traditional ML as what data scientists have called discriminative.

One approach is very quantitative and focused on structured or semi-structured data, while the other is generative, which not only filters information but also generates new information.

Mathematically, discriminative models take a subset of potential responses from a set of data, whereas generative models do that and also generate new responses. This is great for unstructured data. You're right on the money there.

Discriminative is probably the better way to describe classical or traditional machine learning. In the incipient phase of generative AI being launched wholesale through ChatGPT, we referred to traditional ML as what data scientists have called discriminative.

One approach is very quantitative and focused on structured or semi-structured data, while the other is generative, which not only filters information but also generates new information.

Mathematically, discriminative models take a subset of potential responses from a set of data, whereas generative models do that and also generate new responses. This is great for unstructured data.

C.C: I would think of discriminative AI as often used in classification. You would train a model specifically to recognize the sentiment of an Amazon review, for example, categorizing it as positive or negative. This is how most traditional machine learning models functioned.

Large language models (LLMs) do much more. They're trained on so much information that they can handle many tasks well without specific training. With an LLM, you can give instructions to classify sentiment without needing purpose-built training. They know so much that they're able to handle it.

M.B: Yeah, like I said before, you filter out data and then generate new data. Classification techniques would definitely learn from that and be useful even in generative models. Clustering, classification, regression, prediction, and prescription are elements in the domain of discriminative AI. These can still be used by generative models because you are predicting the next token that will appear.

Generative models use different types of technology, like transformers, which are completely different from classifiers. The idea of using these techniques in tandem is where the value is. You use quantitative and qualitative methods, structured and unstructured data together, and bring these models together as ensembles. This creates pipelines for better outcomes.

This is why it's important to fine-tune, have RAG, and have agents purpose-built to fetch certain data or perform certain functions. These feed back into what the models will ultimately do to lead to an outcome.

Fine tuning

C.C: Fine-tuning can be differentiated from pre-training. Pre-training is when you give it the internet and teach it to predict the next word. Fine-tuning, in the sense of a big model, makes it respond to questions instead of generating a follow-on question.

As a builder, I find that the fine-tuning I can do usually happens because I don't have the compute power to do pre-training myself. I can take a model and fine-tune it to turn it into a discriminative piece of functionality, like classification or routing. In my experience, fine-tuning is best today at modifying the tone of how a model responds, not overriding the facts of the underlying model.

I would also add prompting into this. Using good prompting and instructions tells the model what to do. Good retrieval lets the model know what I want it to know. Fine-tuning makes the model sound how I want it to sound. I use these in different ways. For me, fine-tuning is not that useful today.

There are impressive fine-tuning efforts by companies like OpenAI and Anthropic for security, answering, reasoning, etc. However, on my scale, I prefer using retrieval to provide the model with more information and context rather than trying to override the underlying facts with fine-tuning.

M.B: Yeah, I love that answer on so many levels. The only thing I would add is that there's a trade-off at some point between doing retrieval and fine-tuning. These areas could be very domain-specific responses.

For example, if you take a fine-tuned model for tone, and you want your tone to sound like an auditor, you would fine-tune the model with auditing-type language and nomenclature, giving it a corpus of data that matches the type of actor performing as an auditor.

You may end up using both approaches. You might not want to use the large linguistic model to answer routine questions that only an auditor would repeatedly handle. In such cases, a fine-tuned model can retrieve the right data in the process.

C.C: The instructions you're giving it are good prompting, telling it what you want it to do. The documents you're showing it are the retrieval, where you've fetched the relevant documents. That's probably not fine-tuning.

I think of fine-tuning as modifying a copy of the model. Instead of calling it the LLaMA 7 billion model, I'm calling it the Chris Capetta May 8th copy of the LLaMA 7 billion model. It's fundamentally changing and getting a different copy of the large language model.

Fine-tuning will become stronger in the years to come. Using Boomi to pipe the data in, format it, and prepare it will continue to be a good pipeline for that data as fine-tuning becomes more useful.

M.B: I often think of fine-tuning, like you just said, as taking the LLaMA 7B model or a smaller variant and then creating a Chris Capetta variant on that. I can see fine-tuning as multiplying ourselves and our agency, with fine-tuned models for each of us to interact with the world in our voice in various contexts.

This will likely involve a combination of retrieval and fine-tuning. With a linguistic model, the key is converting syntax to semantics. If you're constructing a sentence to derive meaning, language models excel at that. Putting it in your voice will be a significant frontier where fine-tuning will be helpful.

Agents

M.B: I think of agents as functions. In Amazon AWS, their Lambda functions are task-based, such as fetchers, putters, and getters. These functions are ephemeral; they perform a particular purpose and then either go away or become latent. Fundamentally, as an infrastructure person, I see agents as task executioners.

C.C: Agents is a term that has really grown in the last year or so. The technical definition of an agent could be anything with generative AI using tools. There are many agents out there doing cool things. The most exciting element of agents is when they have higher-level goals and make decisions on how to achieve those goals.

You could imagine a scenario where you give an agent or a swarm of agents a high-level directive, like addressing food shortage in a nation. The agents would have the autonomy, access, decision-making, and toolset to decide what goals go into that initiative and what tools are needed.

There are varying degrees of agents, from low-level decision-making AIs with access to external tools, to advanced systems like HAL 9000, but hopefully a nicer version.

M.B: We talk about this all the time, but making sure that results are presented in the right sequence is crucial. If it's unnecessary to sequence tasks and they can be performed in parallel, that's fine too. It depends on the overarching goal. The cool thing is the layers of abstraction on top of that could be constructivist.

These agents could figure out how to coordinate different levels of abstraction to present interesting outcomes, like booking a flight. An orchestration agent could determine which agent class fetches the necessary data to book the flight. You need to know the sequence, like knowing the price before paying. The parameters could come from a prompt or a fine-tuned model that knows your persona, travel preferences, and restrictions.

All of that needs to be orchestrated at different levels. If you can present a goal and allow agents to do the planning and logic, you're making the LLM more self-aware. Yann LeCun, one of AI's top researchers, says LLMs are dumb because they don't plan or reason today. Agents, in my view, allow for a step closer to planning and reasoning.

Maybe one day, LLMs will be sophisticated enough to determine what kind of agency is necessary to achieve a goal. Right now, we're not there, so we need to set up the pipelines for the LLM.

C.C: I'd add to that from a tactical perspective. There are several big AI models starting to build in functionality with tools. OpenAI has a function call and an assistance API, and Anthropic has rolled out tools on Claude. These are nifty for what they can do. However, it seems the versions of the model built to use these tools are almost too focused on that.

I prefer to split out the mechanism that needs a tool from the mechanism that turns unstructured natural language into a structured payload. I like this very distilled, without any insightful actions as part of it.

I would rather prompt regular chat completions to make a plan, then use a version of the model to turn that plan into structured JSON, rather than having it make a plan and give it to me as structured JSON, as it seems to trip over itself.

Model vendors are starting to build in a concept of tools, which is interesting but limited. They seem to think of a tool as a specific API call to an external system. A more interesting use of a tool is as a full-on process that can do many things with predictable input and output.

A tool should resolve to either success or failure, and the higher-level model can react from there. I think of a tool as having significant potential for process orchestration, integration, and automation, which many model vendors are not yet considering.

That's how I would think of agents tactically today in early 2024, as the date should be on anything we're discussing.

Real life use cases of AI

C.C: Yeah, Retrieval Augmented Generation (RAG) keeps coming up because it's practical. The models are really good, but they just don't have the data. RAG is probably the top use case. It's familiar, and chatbots can be made better with it.

Summarization is another fascinating one. If you've got large technical documents to go through, asking a large language model to summarize them can be very valuable. Reading the summary can help you get more out of the document than just pouring through it.

There are intriguing areas of classification and keyword generation, using large language models in creative ways. For example, generating synthetic questions, keywords, or part IDs. Multi-channel, particularly voice, is a fascinating angle where you can have verbal conversations with entities that help you work through ideas.

We will see scenarios where people relate closely to these models as companions. Even the voice conversation in the ChatGPT tool is a cool angle, and we will see a continuation of that.

One other area that has been intriguing is SIM-to-real training, where you train a robot in a simulation and then transfer that knowledge to a real-world robot. It's fascinating because an LLM can run the simulator training at a scale that a human couldn't.

It can run through tens of thousands of parameters objectively, and then you transfer that knowledge to the robot, and it runs. This isn't something we're focused on at Boomi, but it's an intriguing concept that's been on my mind.

M.B: We have fun playing with stuff too. I want to get into a variety of different use cases that we're actually going to market with now. One thing I do want to mention is Chris's work.

He built a conversational way to talk to Claude or any large language model, where we're both interviewing the LLM directly, providing it with voice transcription services, and having interactive communication with our chat entity. We can have existential conversations with it, which is really fun.

At an AI conference last week, we showcased this, and I want to give a shout-out to Chris for that. In terms of practical Boomi use cases that we're going to market with, one example is replacing keyword search and finding ways for customers to have an interactive experience to offload the time humans spend on servicing requests that could be automated.

Another use case is writing copy. From a marketing standpoint, the ability to write new copy and have formatting templates via prompting is real and useful right now.

Sales interaction with potential customers and having very targeted messaging, knowing what their end user customer is going to look like, and what type of email or communication to send to them to create higher open rates are other examples.

Summarization is another prolific use case. Chatbots using methods that no longer require keyword search to get real information back are real things that are happening. In fact, we just had a presentation today by a customer who did exactly that with Boomi.

C.C: One other thought from a practical perspective that occurred to me is that practical tasks are often multi-step. Large language models are powerful for what they do, as are vector databases and similar technologies. Within their boundaries, you could have five steps of interesting tasks and end up with something more interesting than any single step.

For example, you might have an initial step using a large language model to decide on a route, then summarize the issue, retrieve the info, determine which info is useful, and finally ask the question or generate the audio.

From a practical perspective, you can extend the capabilities of these technologies by stringing together multiple uses within their boundaries to achieve something they couldn't do in a single attempt right now.

M.B: One other thing, now that we're riffing, this is getting really cool. Our third-party partners and agent framework allow us to expose the value of their in situ AI.

For example, imagine if you could have a risk score applied to your supply chain as different environmental changes happen—government problems, supply chain breakdowns, changes in weather patterns, or cargo ships going sideways in the Suez Canal.

Having up-to-date ways to risk score your raw material supply chain or your finished product sent to the next stage of the supply chain is something we do today with some of our partners in our agent framework. Another use case is routing to different types of LLM models. We can provide data pipelines that route to the right model for the right question at the right time with the right performance.

For instance, predicting how many students will actually be sitting in a classroom in the fall who agreed to attend university in the spring or determining the payment schedules of accounts receivable with good confidence. These are all practical AI purposes we can achieve today with our framework and our wider partner ecosystem.

M&A Software for optimizing the M&A lifecycle- pipeline to diligence to integration

Explore dealroom

Help shape the M&A Science Podcast!

Take a quick survey to share what you enjoy, areas for improvement, and topics you’d like us to feature. Here’s to to the Deal!

_Easy-Resize.com.jpg)